Guide to Understanding AI Applications that Drive Business Value in MedTech

Greenlight Guru recently surveyed more than 200 MedTech professionals to find out whether they were using AI in their work and what they thought about its potential to help their business.

When we asked how AI could have the biggest impact on their business, the top three answers were:

- Analyzing industry data
- Automating processes
- Searching or summarizing regulatory requirements

Those survey results confirm, in numbers, what we already know from experience: this is an industry with an immense amount of data at hand, but MedTech professionals need better, more efficient ways of finding, understanding, and using that data.

At Greenlight Guru, we’ve spent a decade building MedTech-specific solutions for our customers, and the AI solutions we’re developing (and have already launched) are based entirely on the needs of this industry.

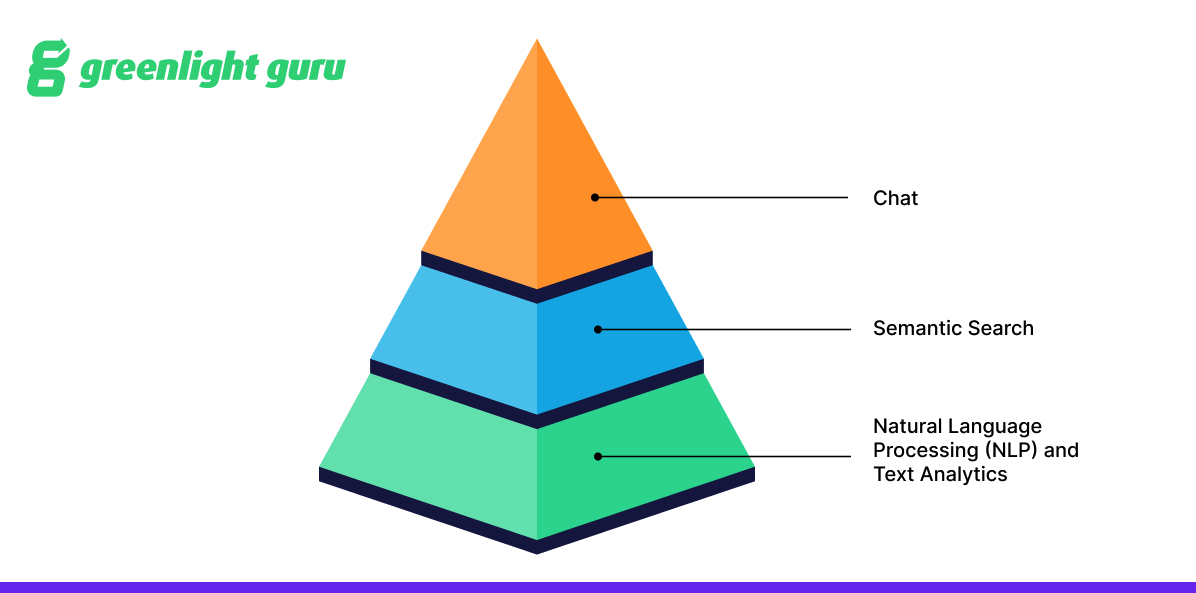

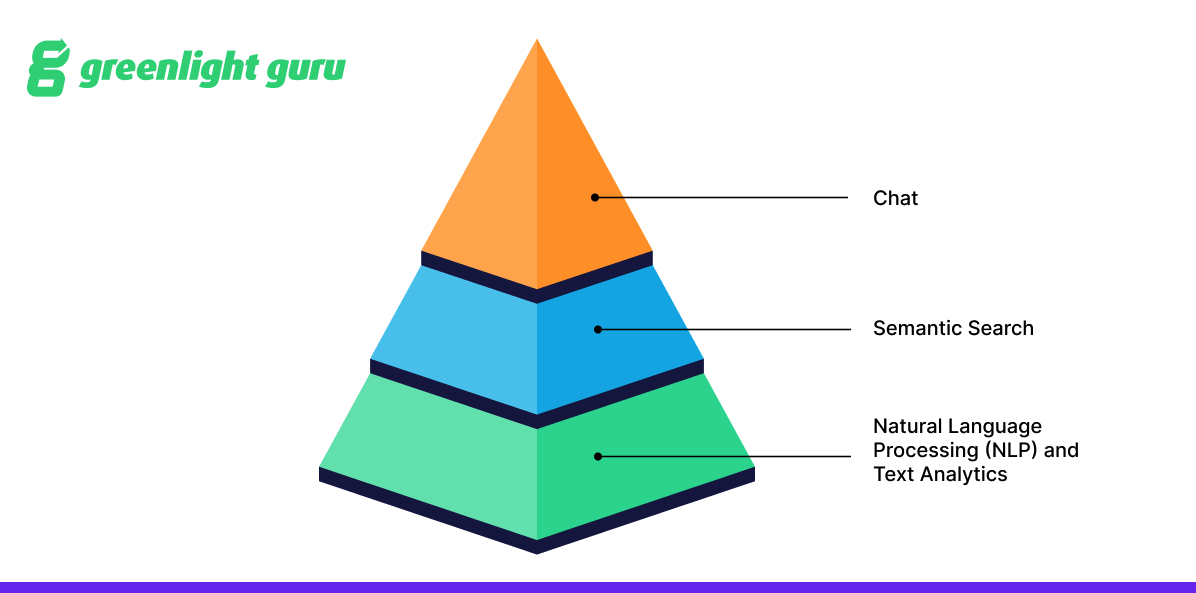

So let’s talk about the ‘how’ behind these applications. We believe the AI applications that will drive real value for our customers long-term—and what we’re working on right now—are built on three core AI use cases:

- Natural Language Processing (NLP) and Text Analytics
- Semantic Search
- Chat

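Together, these use cases form a pyramid, with NLP and text analytics at the base, semantic search in the middle, and chat at the top. The choice of a pyramid is no accident, as each of these use cases supports what’s above it and relies on what’s below it to reach its full potential.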

In this guide, I want to walk you through each of these AI use cases, how they interact with each other, and how they provide a foundation for some really exciting applications in MedTech.

Text analytics and natural language processing (NLP): Turning data into insights

Let’s start by looking at the foundation of our pyramid: natural language processing (NLP) and text analytics.

Natural language processing is a branch of computer science that’s concerned with helping computers read and generate language as a human would. You’ve almost certainly interacted with a product that uses NLP, such as Siri or Alexa or, more recently, a Large Language Model (LLM) like ChatGPT.

Much of the world’s data is stored as unstructured or semi-structured text documents, such as PDFs and HTML pages. Text analytics uses NLP to take unstructured text, like what you find in guidance documents or scientific literature, and extract structured knowledge from it for use in downstream applications.

For example, we could use text analytics to analyze a database of thousands of documents and determine keywords, topics, concepts, or facts and their prevalence within the database. Obtaining that structured knowledge is important because it allows us to generate insights, improve the performance of search engines, and enable other forms of automation.
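
To make "prevalence within the database" concrete, here is a minimal Python sketch of one of the simplest text analytics measures: document frequency, or how many documents mention each term. The three-document corpus and the stopword list are hypothetical stand-ins for a real document database, and a production pipeline would use proper NLP tooling rather than a regular expression.

```python
import re
from collections import Counter

# Hypothetical mini-corpus standing in for a database of guidance documents.
documents = [
    "Biocompatibility testing is required for implantable devices.",
    "Risk management must address biocompatibility and sterilization.",
    "Sterilization validation is documented in the design history file.",
]

# Deliberately small stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"is", "for", "and", "the", "in", "must", "required"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def document_frequency(docs: list[str]) -> Counter:
    """Count how many documents contain each non-stopword term at least once."""
    df = Counter()
    for doc in docs:
        # Use a set so each term is counted once per document.
        terms = {t for t in tokenize(doc) if t not in STOPWORDS}
        df.update(terms)
    return df


df = document_frequency(documents)
for term, count in df.most_common(2):
    print(f"{term}: appears in {count} of {len(documents)} documents")
```

The resulting counts are a small piece of structured knowledge: they can feed a dashboard, boost search ranking for important terms, or flag topics that appear across many documents.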

In the past, these NLP tasks were deeply complex and required custom models trained on high-quality, human-labeled data. LLMs are so disruptive because they speed up our ability to extract structured knowledge from unstructured text. We simply prompt the model to extract the knowledge we want from the source text, which can reduce the time these tasks take from months to minutes.
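
As a rough illustration of prompt-based extraction, the sketch below builds an extraction prompt, sends it to a model, and parses the JSON reply into a Python dictionary. The prompt wording and field names are hypothetical, and `stub_llm` is a placeholder that returns a canned reply; it is not a real LLM API, so swap in whichever model client you actually use.

```python
import json

# Hypothetical prompt template; the field names are illustrative, not a fixed schema.
PROMPT_TEMPLATE = """Extract the following fields from the text below and reply with JSON only:
- "device_type": the type of medical device discussed
- "hazards": a list of hazards mentioned

Text:
{text}
"""

REQUIRED_FIELDS = {"device_type", "hazards"}


def build_prompt(text: str) -> str:
    """Insert the source text into the extraction prompt."""
    return PROMPT_TEMPLATE.format(text=text)


def stub_llm(prompt: str) -> str:
    """Placeholder for a real LLM call; returns a canned JSON reply for illustration."""
    return '{"device_type": "infusion pump", "hazards": ["air embolism", "over-infusion"]}'


def extract_structured(text: str, llm=stub_llm) -> dict:
    """Prompt the model and parse its JSON reply into a dict."""
    reply = llm(build_prompt(text))
    record = json.loads(reply)
    # Check that the expected fields exist before passing the record downstream.
    missing = REQUIRED_FIELDS - set(record)
    if missing:
        raise ValueError(f"LLM reply missing fields: {sorted(missing)}")
    return record


source = "The infusion pump may introduce air into the line or deliver excess volume."
print(extract_structured(source))
```

Validating the reply matters because a model can return malformed or incomplete output; rejecting it early keeps bad records out of downstream search and analytics.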

The ability to turn that unstructured data into structured knowledge is key to generating insights from it—and it makes the next tier of our pyramid possible.

Semantic Search: Getting users answers when and where they need them

Think for a moment about the massive amounts of data available in FDA databases, scientific literature, guidance documents, and even within your company's own QMS. Historically, these data sources have been highly siloed and frustrating to navigate—but AI is changing that.

Using an LLM, we can analyze vast amounts of unstructured data from those sources, organize it, and make it accessible for downstream advanced analytics and search applications.

The superpower behind these capabilities is the next step on our AI pyramid: semantic search.

Semantic search works by attempting to understand the searcher’s intent and the contextual meaning of terms as they appear in the data being searched. You’ve likely used semantic search already: Google Search began emphasizing natural language queries, context, and meaning in 2013.

There are a number of benefits to semantic search, the most immediate being that you no longer have to hope that there is an exact keyword match to your input within the data that you’re searching. Instead, the model is able to decipher the meaning behind your query, combine it with other information such as user history, and return the most contextually relevant results.

Fortunately, AI-powered semantic search is no longer the exclusive domain of the tech giants. At Greenlight Guru, we’re applying semantic search to make the world’s medical device knowledge more accessible. It’s a capability we’ll be infusing throughout all our workspaces, allowing users to find the information they need when they need it.

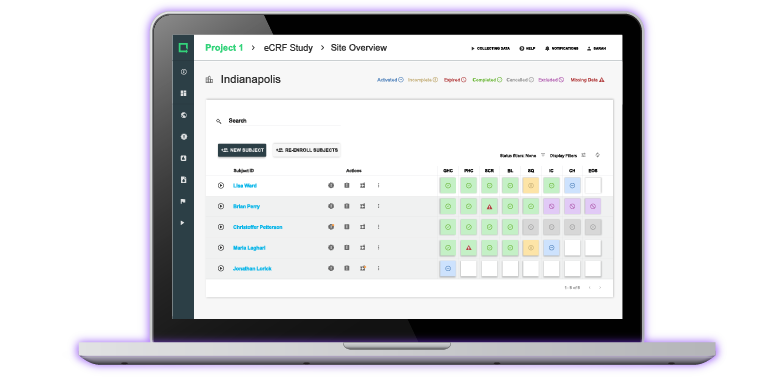

In fact, we recently introduced our first semantic search feature, which helps users identify the best IMDRF code associated with a particular hazard or harm as they build out a risk matrix in our Risk Management workspace. Coding of risks can be slow and labor-intensive, and traditional keyword search is typically unhelpful without an encyclopedic knowledge of IMDRF codes and terminology.

A traditional keyword search for an event like “shattered femur”, for instance, would return zero results. But the semantic search model doesn’t have those limitations. Instead, it would correctly associate this event with the IMDRF term “bone fracture.”
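
The contrast between keyword and semantic search can be sketched in a few lines of Python. Semantic search systems typically compare vector "embeddings" of the query and the candidate terms, often with cosine similarity. In this sketch the three-dimensional vectors are toy values chosen by hand, and the candidate list is illustrative rather than actual IMDRF terminology; a real system would get its vectors from a trained embedding model.

```python
import math

# Illustrative candidate terms; not an excerpt of the actual IMDRF code set.
TERMS = ["bone fracture", "skin burn", "infection"]

# Toy 3-dimensional "embeddings" chosen by hand for illustration only.
# A real system would compute these with a trained embedding model.
EMBEDDINGS = {
    "bone fracture": [0.9, 0.1, 0.0],
    "skin burn": [0.1, 0.9, 0.1],
    "infection": [0.0, 0.2, 0.9],
    "shattered femur": [0.85, 0.15, 0.05],
}


def keyword_search(query: str, terms: list[str]) -> list[str]:
    """Return terms that share at least one exact word with the query."""
    query_words = set(query.lower().split())
    return [t for t in terms if query_words & set(t.lower().split())]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query: str, terms: list[str]) -> list[tuple[str, float]]:
    """Rank terms by cosine similarity between query and term embeddings."""
    q = EMBEDDINGS[query]
    scored = [(t, cosine(q, EMBEDDINGS[t])) for t in terms]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


print(keyword_search("shattered femur", TERMS))      # no shared words, so an empty list
print(semantic_search("shattered femur", TERMS)[0])  # highest-scoring term and its score
```

The keyword search fails because "shattered femur" and "bone fracture" share no words, while the similarity ranking still surfaces the closest term because their vectors point in nearly the same direction.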

As a user builds out their risk matrix—with dozens or even hundreds of potential hazards and harms—semantic search will save the user enormous amounts of time that would have been spent scanning thousands of potential code options. Users can even get predictions for relevant hazards and harms from long-form descriptions of hazardous situations and foreseeable events.

As impressive as semantic search can be, it’s still not quite the peak of what we can do with this technology.

Chat: Bringing it all together

The capstone of our pyramid is a chat application. We can think of chat as the ultimate form of knowledge retrieval, made possible by semantic search applied to a repository of high-quality, structured knowledge.

If you’ve used ChatGPT at all, then you already have a good basic understanding of why this is the top of our pyramid. For example, instead of doing a Google search on, say, “how to clean your rug” and reading through the top 10 search results, you can ask an LLM-based assistant like ChatGPT and simply get an answer.

But there’s a risk with the answers that LLMs generate: hallucination.

When LLMs hallucinate, they provide false information in response to a user’s prompt, but express it confidently and fluently. When the answer a user is looking for is trivial, hallucination may not pose much of a risk. But when it’s essential that a chat agent generates the right answer—or admits it doesn’t know—then hallucination is a huge problem.

To address it, we’re using what’s known as retrieval augmented generation (RAG). With RAG, the system first searches an index of high-quality, factual information for passages relevant to the user’s question, then supplies those passages to the LLM, which generates its answer from them. This is like asking an LLM agent a question, passing it the relevant guidance document, and telling it to base its answer only on the information provided (rather than making up an answer on the fly). Of course, to build a database of high-quality, factual information, you need a strong data mining approach to extract facts from raw source documents.
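
A minimal sketch of the RAG pattern follows: retrieve the passages most relevant to a question, then build a prompt that tells the model to answer only from those passages or admit it doesn't know. The index contents are illustrative placeholder text, not quotations from any guidance document, and simple word-overlap scoring stands in for the semantic search a real system would use. The final call to an LLM is left out.

```python
import re

# Illustrative placeholder passages, not quoted from any real guidance document.
INDEX = [
    "Sterilization processes for reusable devices should be validated and documented.",
    "Biocompatibility of patient-contacting materials should be evaluated.",
    "Software changes should be assessed for their impact on device safety.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, index: list[str], k: int = 2) -> list[str]:
    """Return the k passages sharing the most words with the question.

    Word overlap is a simple stand-in for embedding-based semantic search.
    """
    q = words(question)
    ranked = sorted(index, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Number the retrieved passages and instruct the model to rely on them only."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using ONLY the numbered sources below. "
        "If the sources do not contain the answer, reply \"I don't know.\"\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


question = "How should sterilization be validated?"
prompt = build_rag_prompt(question, retrieve(question, INDEX))
print(prompt)
# In a real system, `prompt` would now be sent to an LLM.
```

The quality of the answer depends heavily on the quality of the index, which is why the extraction and search layers beneath chat matter so much.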

So, in a way, you can look at this pyramid as an answer to the question: how do we tackle the hallucination problem? The answer is to architect the foundation of our AI system like a search engine that only pulls from high-quality sources.

The chat interface simply removes the friction from searching that information and accelerates the process of discovering, learning, and solving practical problems.

AI in MedTech: Looking ahead

So, that’s the path forward.

The question now is, how are we going to use these core AI capabilities to provide real business value in MedTech?

What I want you to consider is what Greenlight Guru has been doing for the past decade. We just celebrated our 10th year of helping MedTech companies modernize quality management, accelerate design and development, and keep up with industry changes to deliver high-quality devices to patients while lowering risks and costs. We’ve been laser-focused on the medical device industry since day one, and that’s not changing.

I mention our past because of the incredible amount of knowledge we’ve gained from working with our customers over the past decade. When we talk about semantic search or adding AI into our workflows, we’re not talking about throwing a chat widget on top of guidance documents or our QMS software and calling it a day.

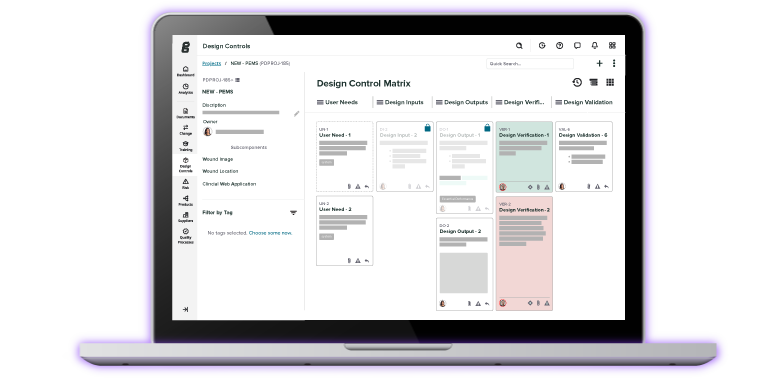

We’re talking about revolutionizing how our industry works. That means making a company’s own QMS and design tools more searchable, interactive, and proactive—integrating real-time feedback, and augmenting it with the most relevant public data sources tailored for their specific products.

And the exciting thing is that this isn’t just where we’re going at Greenlight Guru; it’s where we’ve already gone.

We took our first big step in May of this year with Risk Intelligence, but that is just the beginning. What starts as a drip of AI applications is going to turn into a steady stream, starting with semantic search as well as our own specialized chat applications for regulatory intelligence and customer support.

As we continue to strategically add AI components to our software, know that it will always be purposeful and driven by our customers’ needs, regulatory requirements, and industry best practices.

Learn more about how Greenlight Guru is moving MedTech forward, and get your free demo of our software today ➔

Tyler Foxworthy is the Chief Scientist at Greenlight Guru, where he applies his expertise in AI and data science to develop new MedTech products and solutions. As an entrepreneur and investor, he has been involved in both early and late-stage companies, contributing significantly to their technology development and...
