Human factors and risk create a lot of confusion in the medical device industry. What do you need to do from a design control perspective?

Today’s guest is Mary Beth Privitera, principal - human factors at HS Design and AAMI Human Engineering Committee co-chair. She describes the similarities and differences between human factors validation and design validation.

Like this episode? Subscribe today on iTunes or Spotify.

Some highlights of this episode include:

- What constitutes human factors validation? It involves an international standard and FDA guidance on rules that medical device professionals need to follow.

- The difference between design validation and human factors validation comes down mostly to what’s expected during an audit: if you have a product with a high risk of harm or injury, auditors will want to see the human factors file.

- Most companies don’t know how to intertwine human factors within design and development practices. Use common sense to make a good product design.

- FDA guidance expects user reviews before final validation. Document that early feedback as formal studies during the design process to avoid validation issues with a product later on.

- Pass/fail criteria are a major difference between design and human factors validation, because it’s difficult to define and measure ease of use.

- Understand all elements that could impact a product’s design. Study your users and use environment before identifying and fixing design issues.

- A task analysis identifies each step users need to take and serves as evidence that you considered their needs and made necessary compromises.

- Mary Beth busts myths related to human factors and design validation, and explains why you need three types of people (innovators, judgers, and sideliners) to evaluate and provide feedback on your product’s design.

Links:

FDA Guidance on Human Factors and Medical Devices

Quotes by Mary Beth Privitera:

“If you were to have a product that is high risk of harm or injury, they’re going to want an audit of the human factors file.”

“I think that’s part of the confusion. Do you separate out your documents between design validation and human factors validation?”

“As a rule, I think you have to go back to common sense. What makes sense? Human factors is really all about making a good product design.”

“A lot of companies really are not necessarily paying attention to the standards that are out there.”

Transcription:

Speaker 1: Welcome to the Global Medical Device Podcast, where today's brightest minds in the medical device industry go to get their most useful and actionable insider knowledge direct from some of the world's leading medical device experts and companies.

Jon Speer: You know, sometimes there's a lot of confusion on things like design controls and human factors and risk and all those sorts of things: what do you need to do, and is this human factors stuff above and beyond what you do from a design control perspective? On this episode of The Global Medical Device Podcast, my guest Mary Beth Privitera, Principal, Human Factors at HS Design, dives into the differences and the similarities between human factors validation and design validation, so take a listen and enjoy this episode of The Global Medical Device Podcast.

Jon Speer: Hello and welcome to The Global Medical Device Podcast. This is your host, founder and VP of Quality and Regulatory at Greenlight Guru, Jon Speer, and today I have somebody I feel like I've known for a long time, somebody I feel is a kindred spirit at the very least [chuckle]: Mary Beth Privitera. Mary Beth is a principal, human factors, at HS Design, so Mary Beth, welcome.

Mary Beth Privitera: Thank you, thank you for inviting me and yes, we are kindred spirits. I feel like we've known [chuckle] each other for a long time as well, it's great.

Jon Speer: Alright. So obviously, with your human factors expertise, we're gonna dive into that topic a little bit today. Something I hear a decent amount is that there's a lot of confusion on this topic, and I'm sure you hear that in your role too. But specifically, I think people get really confused about the relationship between design validation and human factors validation.

Mary Beth Privitera: Yes, I hear that often, and in fact, it's one of the hottest debates that we have in the committee. I'm currently Co-Chair of the AAMI Human Factors Committee, and it's one of the things that we discuss in terms of best practices: how do we handle the integration of human factors within design control, and then what's the difference between design validation, with some of the use aspects that get involved in it, versus straight-up human factors validation, and what's the go-between? And that actually leads into: can there be design and human factors validation within a clinical trial? With some of the advanced technologies, you don't necessarily have a choice but to do things in context, so it gets to be a little bit confusing and a little bit muddled.

Jon Speer: Yeah, so on this podcast, what we try to do is provide tips and pointers and helpful information for those who might be trying to tackle this topic. So, before we dive too deep into the conversation, any tips that come to mind?

Mary Beth Privitera: Yeah. Well, with human factors validation, that's your last study, and there are some specific rules around what constitutes human factors validation. So it might be a good starting point to just clarify what it is to do a human factors validation, which is covered both in an international standard and in the FDA guidance. I'm referring to IEC 62366 and the human factors guidance that the FDA put forward, and both of them have really great rules that you can just follow. One of them is that you have to do your human factors validation in the actual environment where the product is going to be used, you have to have representative users, and the tasks that you give them are based on their relationship to harm, 'cause it all goes back to your risk. So in a nutshell: go into the users' environment, have the users do it, and have 15 people. FDA says that you have to have 15 US citizens, whereas the IEC guidelines don't have that strict regimen. So that is, in a nutshell, what constitutes human factors validation. Design validation, I think, has a different definition.

Jon Speer: Okay, so why do you think design validation is different? 'Cause as you were describing it, I'm like, "Alright, that's a criterion for design validation, yep, that is as well," so what do you think?

Mary Beth Privitera: Well, I think it comes down to what's expected in the audit, more so than a distinct difference. So if you were to have a product that is high risk of harm or injury, they're gonna want an audit of the human factors file. And if it's a low-risk product, you may not ever get audited for a human factors file, and it would just be expected to be in there. And I think that's part of the confusion: do you separate out your documents between design validation and human factors validation?

Jon Speer: Right. And that's a topic that comes up from time to time. I think that the design control regulations, although still confusing for a lot of folks, have been pretty well established; they're in the regulations. You'll find them in 820.30 from an FDA perspective, and they're pretty well-defined in ISO 13485. And I know you mentioned there are standards and guidances on human factors, like IEC 62366. But I think what creates some of the confusion is that people don't know how to dovetail or intertwine their human factors work with their design and development practices. Would you agree?

Mary Beth Privitera: Oh, absolutely, I would absolutely agree. And I think, too, because there's an amount of confusion, you get inundated with people saying, "Well, you have to do this, and you have to do that, and oh, you're missing this, and oh, you're missing that." And I think that absolutely creates further confusion. As a rule, I think you have to go back to common sense: what makes sense? Human factors is really all about making a good product design, making something that people want to use, making something that they can use. So from a marketing standpoint, it makes absolute sense why you would do it. When it gets down to the documentation, you could label everything as design validation and have human factors validation in it. The challenge is that if you have a higher-risk product, and in your risk analysis you've identified several critical tasks that can cause harm, then you need to submit a separate dossier to the FDA, which demonstrates that human factors have been addressed, that the critical tasks as determined by risk are acceptable, and that you've mitigated as many as possible.

Jon Speer: Yeah, and as we're talking, I'm thinking I try to be a pragmatist, especially when it comes to what I do and when I do it from a design and development standpoint. So design validation and human factors validation: there are some similarities there, for sure, and I understand some of the differences as well. But I wanna kinda back up. Let's just say I'm going through the design and development planning efforts. What is it that I can do toward the beginning of my project to help put me in a better position for these downstream activities?

Mary Beth Privitera: Yeah, that's also in the guidances. They'll tell you that you need to do user reviews before you get to that final validation, and that can be... It's very loose in the guidance, and I think people don't realize that they need to write it down. So what we often find is that someone will come to us, come to HS Design, to run a validation study, and they may have gone to a conference and gotten user feedback, but they didn't write it down. That user feedback is exactly what they want you to do prior to getting to your validation, but it should be written down as a study. You can conduct formative usability studies where you're bringing in prototypes and having the users review them, and that can be written down, just like your design validation. And the one thing that is different between design validation and human factors validation is pass-fail criteria, because it's hard to measure usability. Measuring usability is a little bit like measuring love, you know, it's not easy.

Jon Speer: Yeah. It's gotta be easy to use. How do you measure that with acceptance criteria?

Mary Beth Privitera: Yeah. And that's another bone of contention, 'cause we go down that path: if you're gonna meet this requirement, then you have a level of acceptance, right? And you've got a high degree of variability with your users. I mean, even if you took one homogeneous use group, let's say only anesthesiologists use it, well, not all anesthesiologists have the same skill sets. Not all users have the same capabilities. They may even have some disability that you're trying to design for if it's a consumer-based product. And that's where it becomes a little bit tricky, and there isn't really any one simple answer at that point in time when you're looking at it. I will say increasingly, when I look at documentation coming from the agencies, they are looking for some type of criteria, and it's hard to run a test without criteria, but it's qualitative in nature.

Jon Speer: Yeah. Well, I like to try to solve, or maybe some people might argue, put things in a design control bucket, maybe things that don't always belong in a design control bucket. But the classic example of a user need is something along the lines of, "My product must be easy to use," and if you capture that, I get that. It's really hard to address from a design validation standpoint, but that might be the type of user need that you would address with human factors validation. Of course, you want to put some more definition around that as you go through the design and development process. But when you're capturing user needs, they don't all have to have clearly identified acceptance criteria, at least at the user need stage. Would you agree with that?

Mary Beth Privitera: Oh, absolutely, and I don't think that there's a product known to man that doesn't have ease of use as one of its fundamental criteria. [chuckle] That's a given. I'd like it to be easy to use, but the challenge gets to be exactly what you pointed out: what defines ease of use? What we as engineers and as designers think is easy to use may be completely and entirely different from what a user needs. And some of the advanced technologies are inherently hard to use; they're pretty complex systems, because what they're trying to do is complex. So it's not inappropriate for it to be a challenge to use, and that could be acceptable, because that's what the technology or the market requires. Now, consumer products, I think that's a completely and entirely different story.

Mary Beth Privitera: So I would say that, as a rule, when you're trying to define what makes ease of use, and that gets to the testing you do as you go through the design process to improve your likelihood of success at the end, there are always elements of time. There are always elements of fit. Does it fit into their hand if it's supposed to fit into their hand as an extension of the body? Do they understand and comprehend it? Does it give them feedback? There's an inherent loop that every product has, where you do something and then the product has a reaction to it: maybe it acts on some tissue in a certain way, or it measures something and gives you feedback, it has a ding, and that tells you to do something about it. So can they understand that? So time, feedback: do they know what to do with the signal? Is the signal appropriate for the context? Those are some of the ways that you can define ease of use and put that into your human factors validation study.

Jon Speer: Well, as we've been talking, I feel I might be remiss if we didn't give the audience a clear picture of the continuum, the whole flow of the types of activities and behaviors that one might be expected to provide as part of their design and development efforts from a human factors perspective. Yes, we talked about human factors validation, but that's a pretty late-stage activity. There's kind of a build-up to that human factors validation. Can you take a moment and talk about some of the earlier activities that one might be expected to consider from a human factors perspective?

Mary Beth Privitera: Yeah, so I'll give you an ideal picture, which is very rarely the circumstance because we don't live in an ideal, perfect world, but let's just say that we did. I think the perfect scenario would be that you would study your users in the use environment prior to the onset of a design problem, before you tackle it. Almost everyone knows, okay, I wanna be in a particular genre, let's say it's in the OR, or it's a drug for a particular disease state, and you can study the patients. How do they get their medications? How does the flow work? So it's understanding all of the elements that could potentially impact that design and defining who that user really is, and by that I mean: what are their capabilities? What is their typical educational background? What are their physical capabilities? Do they have disabilities or not? It's truly understanding from a cultural, anthropological perspective who your user is, and then what environments this product is going to be touching. So it's, in essence, taking a day in the life of your proposed product and looking at all the elements it touches and all the stakeholders it touches, from the scrub nurse or the pharmacist that has to pull it off the shelf to its actual use, delivery, and disposal.

Mary Beth Privitera: So taking that perspective, now I can understand my user and my environment, and I can work out: what are the criteria? What are the fundamental tenets that happen within that user? Does the user always behave in a certain way? Can I look for those patterns that will then impact the design while I'm in that design process? So that's where it starts. That whole process and research methodology is called contextual inquiry. Then I start designing something, and here's where a lot of companies really are not necessarily paying attention to the standards that are out there. There's a great standard that AAMI has, AAMI HE75; it's a design standard. It's the only one in the world that's a design standard. So say I have a hand tool: I can go into that design standard and look up controls. I can look up feedback. I can look up feedback modalities and see what's going to be best in terms of overall human use capabilities that I can identify in my product design, and that's truly applied human factors at that point in time, putting it into the design. From there I would go and get users to agree or disagree. Did I really apply HE75 principles to the design correctly? Where are the downfalls? And what I haven't mentioned is risk.

Mary Beth Privitera: You can bring in risk. The other part to do within your contextual inquiry is what's called a task analysis. A task analysis breaks each step down into its components: what does the user have to do? And you can do an analysis to say, will the user be able to understand what they need to do next? So once you have a design, you can apply some of the human factors methodologies and tools to assess how usable it is while you're still in the design process, before you talk to users. Then you can go and talk to users, and that can be super casual, but it needs to be written down to build up that dossier and that evidence that you've considered these user needs along the way, you've considered their opinions, and you've made changes, 'cause it's easier to make changes earlier rather than later. And that builds up into your final summative usability study for your human factors validation. So that's the ideal, and I find there are typically compromises along the way, because I think product development is a series of compromises: what do we have, and how can we meet our budget and our timing considerations?

Jon Speer: Alright, that's a really good summary. So, just a couple of myths. We'll go into a round of myth-busting, so to speak, and I've never done this before on the Global Medical Device Podcast. So first, myth or truth, you decide: from a human factors standpoint, I have to do something that's completely orthogonal and separate from my design history file and from my risk management file. Truth or...

Mary Beth Privitera: That's myth, myth, myth, myth, myth. It's meant to be integrated, but you do wanna put a little special marker on there, so it's a little clearer in case you're audited. If it's a Class I product that's low risk, a non-significant risk device from an IRB perspective, and you can judge that based on your risk analysis, then integrate the whole thing. There's no reason to have something separate. If it's high risk and I'm going to have to put a dossier together, you need to earmark it, because you will have to know what those documents are.

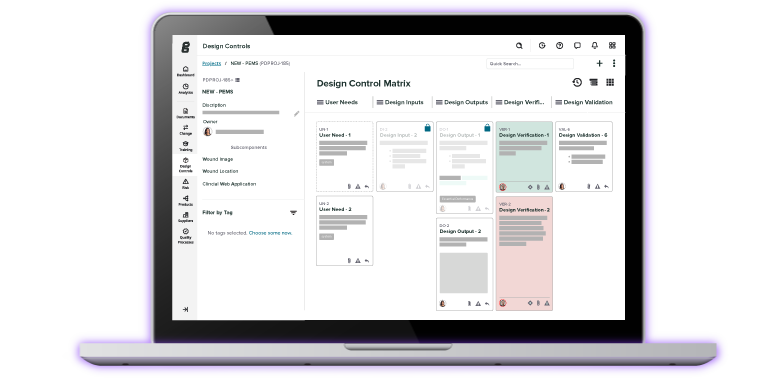

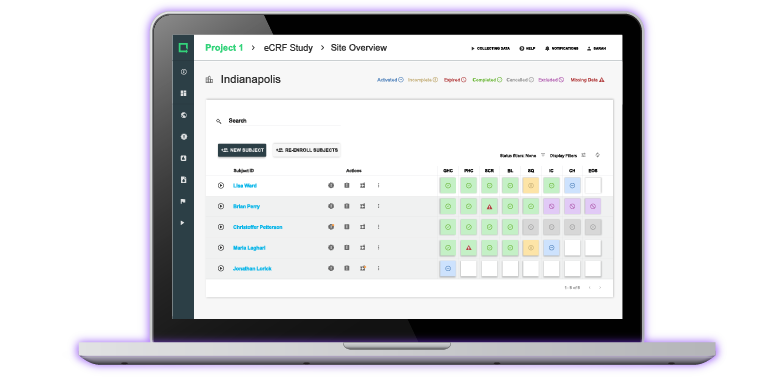

Jon Speer: Alright, so first I wanna remind you I'm talking to Mary Beth Privitera. She is Principal, Human Factors at HS Design. You can learn a whole bunch more about HS Design by going to HS-design.com; it's a really great firm and a partner of ours at Greenlight Guru. And while we're talking about human factors and design control and risk, I wanna remind you all that at Greenlight Guru, we have an EQMS software platform designed specifically for the medical device industry by medical device professionals. And certainly when you're dealing with design controls and human factors, those are all activities that you can manage, including the traceability of these activities, all within the Greenlight system. And yes, the point that you just made, Mary Beth, about being able to tag or flag human factors items is absolutely part of the workflow in the Greenlight system. Alright, so the next myth, and it sounds like somebody is happy in the background, so...

Jon Speer: It's okay. The next myth is: not every device requires human factors. Is that truth or is that myth?

Mary Beth Privitera: Not every device requires human factors? I'd go with that's a myth. I have no idea why you would ever want a product that's not usable. If it's not usable, no one's going to want to buy it, even if they love the technology. From my perspective, which obviously is very biased, every product should have usability in its consideration.

Jon Speer: Alright, a couple other things I thought we could cover before we wrap up today's podcast. The first is something you mentioned earlier, about having a group of users involved in your human factors validation. I was reminded of a conversation I had earlier this week with a product development expert, and we talked a little bit about that topic. Specifically, a lot of start-ups these days seem to have been started by an inventor, an entrepreneur, or a researcher, and in many cases they may be that key opinion leader. They may have a very vested interest in the product, so a lot of their activities have a bias towards what they believe to be right, based on their own personal experience and that sort of thing. There are some flaws in that, and that's what I liked about what you said: have a broader group use the product, don't just focus on a key opinion leader. Can you maybe elaborate on that more?

Mary Beth Privitera: Yeah, sure. I think if you opened up any product development book, you'd find that knowing your user and understanding them from a very, very broad perspective is what they say to do. It just opens up the door. And I do enjoy working with key opinion leaders and having them influence the design. I think that they're absolutely important; they're amazingly critical. I always say that you need three types of people to evaluate your design when you're in product development. You need an innovator, somebody that's a forward thinker, and that's typically your key opinion leader: they're on it, they are committed to it, and they love it. But you also need a judger. You need somebody who, no matter what you bring to them, is gonna hate it, and that's typically not the same person as your innovator, the key opinion leader.

Mary Beth Privitera: Your key opinion leader isn't looking at it with the harshest of criticisms: "No, I will never use that. That's a dumb idea. I can't believe this." You want to talk with the people who are, and engage them in a conversation to ask: why? Why do you have that opinion? Because if you understand why they have that opinion, then you can make a better design. And then there's a host of people that are just sort of on the side, the sideliners. So innovators, judgers, and sideliners are who you need to talk to when you're developing your product, so that you can get a very, very broad opinion. A sideliner might be somebody that's waiting for a peer-reviewed journal article to come out that says, "This is going to work." They don't wanna be the first adopter, but they don't wanna be the last one either. And you wanna understand their motivation, so take all of those viewpoints into consideration. There's a role for the key opinion leader. The other part about a key opinion leader is that they're usually really, really, really, really good, and you wanna design for the people who are less good.

Jon Speer: Yeah, I'm reminded of a project we worked on a few years ago, or I say a few, probably like 15, maybe more like 18 years ago. Anyway, I worked for a company where a lot of the products we developed were physician-invented, oftentimes by those key opinion leaders. On this particular product, there was a component of the device that was a little tricky to understand; it probably required a lot of skill to be really adept at using that particular feature. And we started getting feedback from users after we had launched that they were struggling with that particular component, and it was surprising to us because of the work we had done to get the product to market. This was the early days of human factors, and I don't know that we'd even coined the term at that time, but the key opinion leader was pretty much involved with a lot of the validation activities, which, looking back, had a lot of flaws as a methodology.

Jon Speer: And so we contacted that key opinion leader and he is like, "Oh yeah, that thing is a problem all the time. I usually just do this, this, and this." But he didn't give us that feedback. And so we had to make some design modifications, very much after the fact. And so those are some challenges. The other thing I like about what you said about the judger, that seems like a person I wanna talk to pretty early on, and not when I'm doing design validation and human factors validation.

Mary Beth Privitera: Yeah, absolutely, absolutely. You immediately recognize someone with those personality traits, or someone who's just grumpy that day, and that's a happy person to talk to for product development, because in order for you to advance design, you need to have a negative conversation, which is the antithesis of sales. So don't bring your sales reps along with you, but certainly get people that are truly interested in evaluating that design from a very free and open perspective. It'll only make it better.

Jon Speer: Yeah, and folks, do that early. You don't have to have a final product to get feedback from these different user groups. In fact, I would encourage you, even with just a concept, to find those judgers early so that you can better design your product, and to involve the user throughout the process. I think sometimes we get conditioned to involve the end users of our product only at certain stages, and oftentimes late stages, of the product development process. My advice to you is to try to incorporate user feedback throughout your entire design and development process. It's challenging. Folks like Mary Beth and HS Design can certainly help facilitate that, so lean on experts like Mary Beth. You mentioned something earlier and kinda glossed over it, but I wanna revisit it, because I think sometimes we're looking for black-and-white answers as far as how many of this and how many of that. You were very specific with a number about how many people need to be involved in a human factors validation. You said 15. What is 15 all about?

Mary Beth Privitera: That's a good one. If you go to the FDA guidance, there's a whole statistical justification for the number 15 in the human factors guidance that the agency put out for us. It's also in 62366, and in a nutshell, I think that's where you get some statistical relevance, and it has to be 15 homogeneous users. That's the only spot in the guidance that tells you the number of users. So to your point, and we agree, that's our kindred spirit coming out, you should involve the users throughout the process. The guidance doesn't tell you how many users you should involve early; it only tells you how many you should have at the validation point, because you're not making any changes. At the end of a validation study, when you do your residual risk analysis, you're really only going to be able to change the instructions for use or training at that point in time, or that's where you should be. If you have to make a design change 'cause something's gone awry, then you're back to revisiting your validation study and retesting at that point in time.

Mary Beth Privitera: So there's no magic number up until that point, and to your point about going out and talking to users, you don't have to be really, really formal about that approach. In fact, being informal is helpful, because you wanna engage them in a conversation about what improvements they'd like, and if you're very formal about it, people like to please each other, so they may not give you as harsh a criticism in a formal setting 'cause they want you to do well. So you want it to be casual. You can take out prototypes; those prototypes might be on paper, they may be conceptual designs, they may be rough, or they could be a virtual experience where you have them walk through something that's gone through extensive design. It just makes it a little bit more... You might have a more difficult time making design changes at that point in time.

Mary Beth Privitera: So the magic number 15 is what the guidance says. I haven't been able to find this reference, but when I was in design school, very early on, there was a very old, wise professor who said patterns of behavior are gonna show themselves right around seven opportunities, be confirmed at seven to nine opportunities, and then be confirmed again by 12. I haven't been able to find that in a reference, so don't hold me to that, but it tends to be true that you'll see a pattern of behavior somewhere between seven and nine, and then it'll repeat itself by the time you get to 15. So that's how I interpret their statistical number and how they get to it. It doesn't mean you need to do 15 users all the time, though.

Jon Speer: As you were sharing that bit about where 15 came from, I was thinking this is where human factors, if there's such a thing, gets a bad rap. In my experience, mostly from the outside looking in, I see a lot of companies that, I don't wanna say they forget about human factors, but they don't give it its proper due diligence throughout the entire design and development process. Then they're quickly approaching submission or some other critical milestone and realize, "Oh crap, gotta do some human factors, so let's do this sort of thing."

Jon Speer: They didn't put thought into human factors at earlier stages of development. They get to the human factors work, whether that's the validation study or some other type of activity, and they find out, "Oh my gosh, we gotta make this change and that change and this change." So now they're thrust back into earlier phases of development, they have to spend more time and more money, things are delayed, and the curmudgeon engineer's like, "Ugh, it's 'cause I had to do human factors stuff," and it's like, "Whoa, time out, buddy." Bring in human factors earlier. Not that these later-stage activities become trivial, but my goodness, find out where the gotchas are as soon as you can, rather than waiting until very, very late in the process.

Mary Beth Privitera: Yeah, and I'll just go with that example. Let's say they went ahead and did the human factors study, it didn't perform very well, and they wrote a nice narrative to wrap it up and submit to the agency, and they go to market with it. At the end of the day, all the use errors they saw in that study are gonna be the use errors that your customer relations office and your adverse event reporting get back. You can see a one-to-one relationship with what users do when they have that product, 'cause no... other than the training that you would normally provide. That's part of the validation study: you provide the level of training that they would get. So if it's none, they get no training, and they rely on those instructions for use, and they may completely and entirely misconstrue something or not understand something. And that will increase your customer complaints. Even with some of the lower-risk products, where you're not necessarily paying much attention to human factors, let's say you didn't do it early, and then you did your validation study. You came up with some errors, but maybe they weren't egregious. You can just plan on that: that's what you're gonna get the calls about.

Jon Speer: Yeah. Absolutely. And I'm gonna let that be the final word on today's podcast. Mary Beth, thank you so much. I've really enjoyed this conversation about the relationship between design validation and human factors validation. Thank you so much.

Mary Beth Privitera: Thank you, Jon. Best of luck.

Jon Speer: Alright, folks. Absolutely. So, folks, if you wanna learn more about human factors, the do's and the don'ts, I would encourage you to reach out to Mary Beth Privitera. She is Principal, Human Factors at HS Design, HS-design.com. And as I mentioned earlier, and we'll share with you again, be sure to go to Greenlight Guru, www.greenlight.guru, to learn more about the Greenlight Guru EQMS medical device-specific software platform. We'll help you through your design and development activities, including human factors, risk, and design control, so you should check that out. Thank you for listening. As always, this is your host, the founder and VP of Quality and Regulatory at Greenlight Guru, Jon Speer, and you have been listening to the Global Medical Device Podcast.

ABOUT THE GLOBAL MEDICAL DEVICE PODCAST:

The Global Medical Device Podcast powered by Greenlight Guru is where today's brightest minds in the medical device industry go to get their most useful and actionable insider knowledge, direct from some of the world's leading medical device experts and companies.

Nick Tippmann is an experienced marketing professional lauded by colleagues, peers, and medical device professionals alike for his strategic contributions to Greenlight Guru from the time of the company’s inception. Previous to Greenlight Guru, he co-founded and led a media and event production company that was later...